Dear Readers,

Given our partners’ continued interest in AI (Artificial Intelligence), we’ve dedicated this month’s insights to our experiment on using AI for information retrieval. Please note that this experiment is still ongoing and is led by our internal digital and medical writing teams.

Without further ado, let’s dive in!

Throughout 2024, the topic of AI’s role in medical writing came up repeatedly in conversations with our partners. They wanted to know, in broad terms, whether AI could assist in any way.

The main purpose of our experiment was to test the accuracy of the output and references, along with the response time.

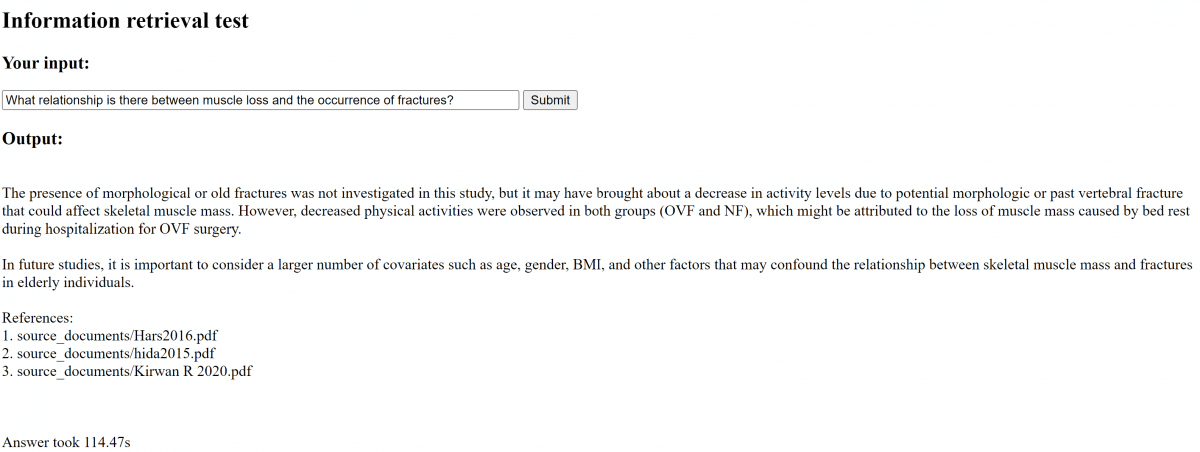

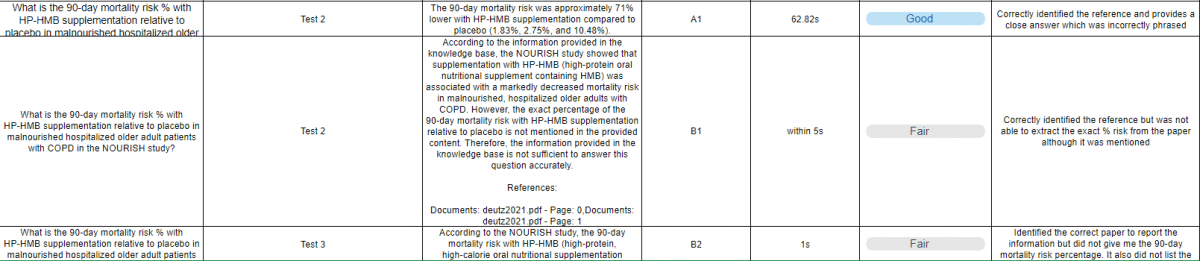

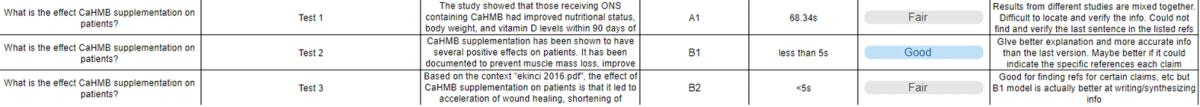

For our first setup, we adopted a self-hosted, open-source Large Language Model (LLM) with limited documentation input. These were the main observations from our first test with 12 inputs, using model “A1”:

Time for major changes!

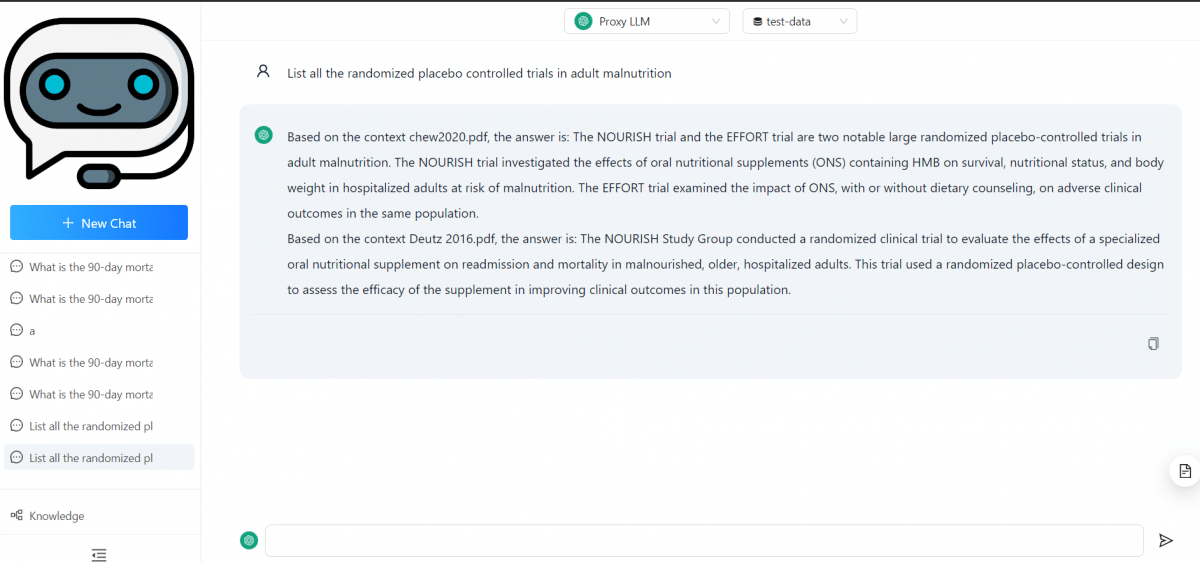

After 1.5 months, we made two key changes for the revamped model “B1”: using OpenAI directly and hosting on a dedicated server. We tested it with the same 12 inputs:

Overall, model “B1” performed better than model “A1”, but its answers were still not specific enough. Slight modifications were still needed.

This time, our digital team made some slight tweaks so that our medical writers could test model “B2” using the same 12 inputs. We used OpenAI in Azure (instead of accessing OpenAI directly), and answers were meant to be separated per paper. These were our main observations:

While we’re still making tweaks and running further tests, it remains inconclusive (as of today) whether AI can assist in information retrieval. It did show some promise, but the output and referencing need to be extremely accurate. We’ll continue to provide updates in due time!

For any clarification, please email: michael.phee@drcomgroup.com | marketing@drcomgroup.com